Before we dive into the complexities of backlink analysis and how to apply it strategically, it is worth establishing a clear philosophical foundation. Making that foundation explicit sharpens how we build backlink campaigns and keeps our methodology transparent as we work through this subject.

In the competitive landscape of SEO, we prioritize the reverse engineering of our competitors’ strategies. This essential step not only yields valuable insights but also forms the basis for a comprehensive action plan that will direct our optimization initiatives.

Navigating Google’s algorithms is challenging, especially because we often rely on limited sources of information such as patents and quality rating guidelines. These sources can inspire innovative SEO testing ideas, but we must treat them with healthy skepticism rather than accept them at face value. How far older patents still apply to today’s ranking algorithms is uncertain, which is why we gather insights, run tests, and validate our assumptions against current, relevant data.

The SEO Mad Scientist acts like a detective, utilizing these clues to formulate tests and experiments. While this layer of understanding is important, it should only constitute a small part of your overall SEO campaign strategy.

Next, we emphasize the significance of competitive backlink analysis as a cornerstone of effective SEO practices.

I stand firmly by this statement: reverse engineering the elements that succeed within a SERP is the most effective way to guide your SEO optimizations. No other approach matches its efficacy.

To clarify this concept, let’s revisit a basic principle from seventh-grade algebra. Solving for ‘x’, or any variable, means taking the known constants and applying a sequence of operations until the variable’s value is determined. In SEO, our competitors’ tactics are those constants: by observing them, we can analyze the topics they cover, the links they secure, and their keyword densities.

However, although amassing hundreds or thousands of data points may seem advantageous, much of that information offers little insight. The real value of analyzing larger datasets lies in identifying patterns that correlate with ranking fluctuations. For many, a streamlined list of best practices derived from reverse engineering will be enough for effective link building.
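One simple way to check whether a pattern "correlates with ranking fluctuations" is a rank correlation between a link metric and SERP position. The sketch below computes Spearman's rho with only the standard library. Using referring domains as the metric is our assumption, and the numbers are made-up placeholders, not real SERP data.

```python
# Minimal Spearman rank-correlation sketch (standard library only).

def average_ranks(values):
    """Return 1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j across a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman's rho: the Pearson correlation of the two rank vectors.

    Raises ZeroDivisionError if either input is constant.
    """
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sum((a - mx) ** 2 for a in rx) ** 0.5
    sy = sum((b - my) ** 2 for b in ry) ** 0.5
    return cov / (sx * sy)


# Hypothetical top-5 SERP: position 1 is the best result.
positions = [1, 2, 3, 4, 5]
referring_domains = [900, 750, 800, 300, 120]
print(round(spearman(positions, referring_domains), 2))  # -0.9
```

A negative rho here means more referring domains tend to coincide with better (numerically smaller) positions. Across many queries, a consistently strong coefficient is the kind of pattern worth testing; a single SERP proves little.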

The final aspect of this strategy is not merely reaching parity with competitors but surpassing them. This may seem daunting, particularly in highly competitive niches where matching the top-ranking sites could take years, but baseline parity is only the first phase. A thorough, data-driven backlink analysis is crucial to getting there.

Once this baseline is established, your objective should be to outshine competitors by sending Google the right signals to improve rankings, ultimately securing a prominent position within the SERPs. Unfortunately, these vital signals often boil down to common sense within the realm of SEO.

While I find this notion somewhat unappealing due to its subjective nature, it is critical to acknowledge that experience, experimentation, and a proven track record of SEO success contribute significantly to the confidence required to identify where competitors falter and how to address those gaps in your planning process.

5 Actionable Steps for Mastering Your SERP Ecosystem

By thoroughly exploring the intricate ecosystem of websites and backlinks that contribute to a SERP, we can uncover a trove of actionable insights essential for crafting a robust link plan. In this section, we will systematically organize this information to pinpoint valuable patterns and insights that will bolster our campaign.

Let’s take a moment to discuss why we organize SERP data this systematically. Our approach centers on a thorough analysis of the top competitors, which builds a more complete picture the deeper we dig into the subject.

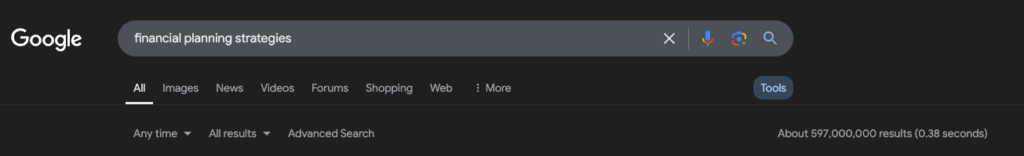

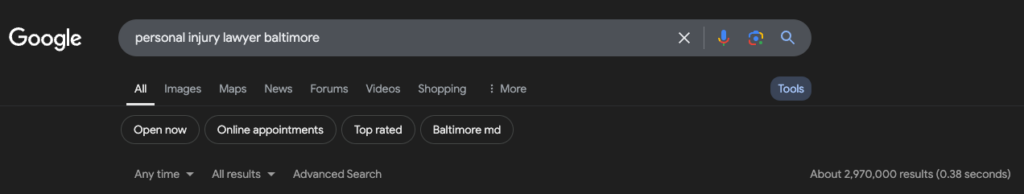

Conducting a few searches on Google will reveal an overwhelming volume of results, often exceeding 500 million. For instance:

Although our analysis primarily focuses on the top-ranking websites, it’s important to recognize that the links directed toward even the top 100 results can hold significant statistical relevance, provided they are not spammy or irrelevant.

My goal is to gain comprehensive insights into the factors that influence Google’s ranking decisions for leading sites across various queries. With this information at hand, we are better positioned to develop effective strategies. Here are just a few key objectives we can achieve through this analysis.

1. Uncover Key Links That Impact Your SERP Ecosystem

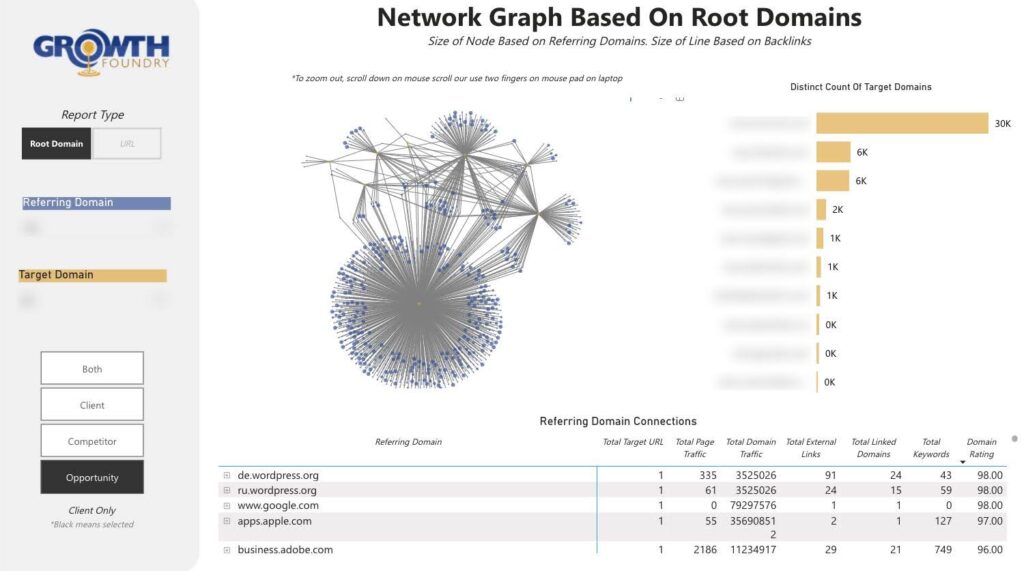

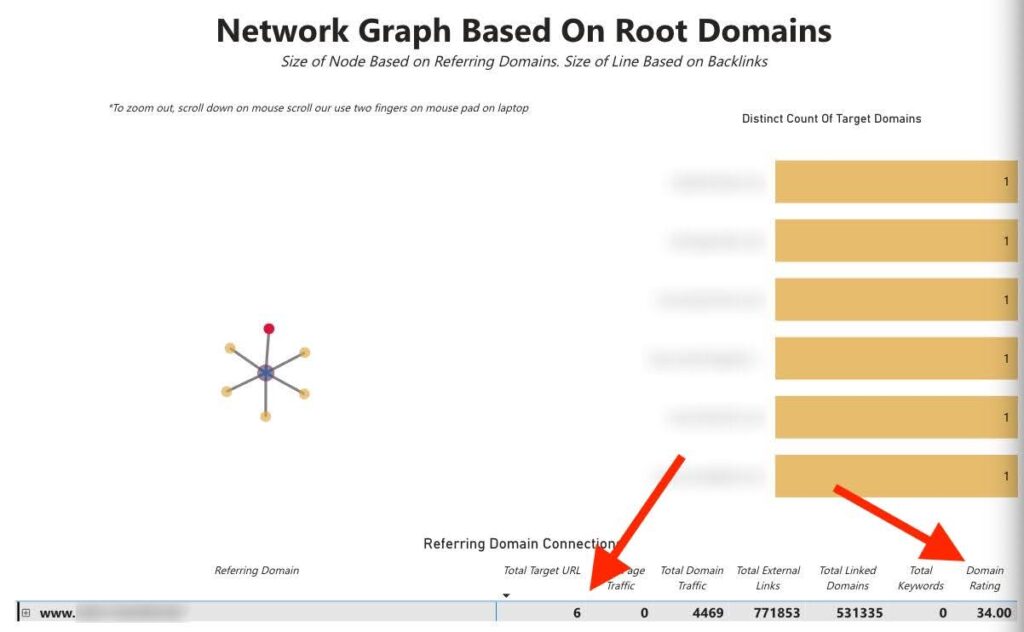

In this context, a key link is a link that appears repeatedly in our competitors’ backlink profiles. The images above illustrate this: specific links point to almost every site in the top 10. Broadening the analysis to a wider range of competitors will uncover even more intersections like this one. The approach also has support in SEO theory, as the following sources suggest.

- https://patents.google.com/patent/US6799176B1/en?oq=US+6%2c799%2c176+B1 – This patent enhances the original PageRank concept by incorporating topics or context, recognizing that different clusters (or patterns) of links carry varying significance based on the subject area. It serves as an early illustration of Google refining link analysis beyond a singular global PageRank score, indicating that the algorithm detects patterns of links among topic-specific “seed” sites/pages and utilizes that information to adjust rankings.

Essential Quote Excerpts for In-Depth Backlink Analysis

Implication: Google identifies distinct “topic” clusters (or groups of sites) and employs link analysis within those clusters to generate “topic-biased” scores.

While it doesn’t explicitly state “we favor link patterns,” it suggests that Google examines how and where links emerge, categorized by topic—a more nuanced approach than relying solely on a universal link metric.

“…We establish a range of ‘topic vectors.’ Each vector ties to one or more authoritative sources… Documents linked from these authoritative sources (or within these topic vectors) earn an importance score reflecting that connection.”
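To make "topic-biased scores" concrete, here is a minimal sketch of textbook personalized (topic-sensitive) PageRank, in which the random jump lands only on a set of topic seed pages. This is a generic formulation we are substituting for illustration, not the patent's exact method; the page names and the `seeds` set are hypothetical.

```python
def topic_biased_pagerank(links, seeds, damping=0.85, max_iter=100, tol=1e-10):
    """Personalized PageRank whose random jumps land only on topic seed pages.

    links: dict mapping each page to the list of pages it links to.
    seeds: pages treated as authoritative for one topic (hypothetical input).
    Returns a dict of scores that sums to 1.
    """
    nodes = set(links) | set(seeds)
    for targets in links.values():
        nodes.update(targets)
    # Teleport vector: all "random jump" probability goes to the seed set.
    teleport = {n: (1.0 / len(seeds) if n in seeds else 0.0) for n in nodes}
    score = dict(teleport)
    for _ in range(max_iter):
        new = {n: (1 - damping) * teleport[n] for n in nodes}
        dangling = 0.0
        for page in nodes:
            targets = links.get(page, [])
            if targets:
                # Split this page's rank evenly across its outlinks.
                share = damping * score[page] / len(targets)
                for t in targets:
                    new[t] += share
            else:
                dangling += damping * score[page]
        # Pages without outlinks hand their rank back to the seeds.
        for n in nodes:
            new[n] += dangling * teleport[n]
        delta = sum(abs(new[n] - score[n]) for n in nodes)
        score = new
        if delta < tol:
            break
    return score


links = {
    "seed.example": ["guide.example"],
    "guide.example": ["tool.example"],
    "unrelated.example": ["tool.example", "other.example"],
}
scores = topic_biased_pagerank(links, seeds={"seed.example"})
```

Here `tool.example` earns score because it sits downstream of the seed cluster, while `other.example`, linked only from outside that cluster, scores zero. That is the sense in which the same link can carry different weight depending on topic.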

Insightful Quote from Pioneering Research Paper on Link Analysis

“An expert document is focused on a specific topic and contains links to numerous non-affiliated pages on that topic… The Hilltop algorithm identifies and ranks documents that links from experts point to, enhancing documents that receive links from multiple experts…”

The Hilltop algorithm aims to identify “expert documents” for a topic—pages recognized as authorities in a specific field—and analyzes who they link to. These linking patterns can convey authority to other pages. While not explicitly stated as “Google recognizes a pattern of links and values it,” the underlying principle suggests that when a group of acknowledged experts frequently links to the same resource (pattern!), it constitutes a strong endorsement.

- Implication: If several experts within a niche link to a specific site or page, it is perceived as a strong (pattern-based) endorsement.

Although Hilltop is an older algorithm, aspects of its design are believed to have been integrated into Google’s broader link analysis algorithms. The idea of “multiple experts linking similarly” supports the view that Google scrutinizes backlink patterns.
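The core Hilltop intuition, that a page endorsed by several non-affiliated experts is stronger than one endorsed by a single source, can be approximated on your own link data. The sketch below is a heavy simplification: it treats "affiliation" as sharing the last two hostname labels, whereas the original paper's affiliation test is more involved. The URLs and the `min_experts` threshold are hypothetical.

```python
from collections import defaultdict
from urllib.parse import urlsplit


def affiliation_key(url):
    """Crude stand-in for 'affiliation': the last two labels of the hostname."""
    host = urlsplit(url).hostname or ""
    return ".".join(host.split(".")[-2:])


def expert_endorsed(expert_links, min_experts=2):
    """Return targets linked from at least min_experts non-affiliated expert groups.

    expert_links maps an expert page URL to the URLs it links out to.
    """
    endorsers = defaultdict(set)
    for expert, targets in expert_links.items():
        group = affiliation_key(expert)
        for target in targets:
            if affiliation_key(target) != group:  # ignore links within one affiliation
                endorsers[target].add(group)
    return {t: len(g) for t, g in endorsers.items() if len(g) >= min_experts}


expert_links = {
    "https://guide.alpha.example/tools": ["https://tool.example/"],
    "https://www.beta.example/list": [
        "https://tool.example/",
        "https://other.example/",
        "https://shop.beta.example/",  # same affiliation, so ignored
    ],
    "https://blog.beta.example/post": ["https://other.example/"],
}
print(expert_endorsed(expert_links))  # {'https://tool.example/': 2}
```

`other.example` is linked twice, but both links come from the same affiliation group, so it counts as a single endorsement and falls below the threshold.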

I consistently seek positive, prominent signals that recur during competitive analysis and aim to leverage those opportunities whenever feasible.
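The key-link idea from the start of this step, a referring domain that shows up in most competitors' profiles, reduces to a frequency count once backlink exports are loaded. A minimal sketch, assuming each competitor's profile is already a set of referring domains; the domains and the `min_share` threshold are hypothetical.

```python
from collections import Counter


def key_links(profiles, min_share=0.7):
    """Return referring domains that link to at least min_share of the competitors.

    profiles maps each competitor domain to the set of referring domains
    pointing at it (for example, loaded from a backlink tool export).
    """
    counts = Counter()
    for referrers in profiles.values():
        counts.update(set(referrers))  # count each referrer once per competitor
    threshold = min_share * len(profiles)
    return sorted(
        (domain for domain, n in counts.items() if n >= threshold),
        key=lambda d: (-counts[d], d),  # most widespread first
    )


profiles = {
    "competitor-a.example": {"dir.example", "news.example", "blog.example"},
    "competitor-b.example": {"dir.example", "news.example"},
    "competitor-c.example": {"dir.example", "forum.example"},
}
print(key_links(profiles))                 # ['dir.example']
print(key_links(profiles, min_share=0.6))  # ['dir.example', 'news.example']
```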

2. Backlink Analysis: Pinpointing Unique Link Opportunities with Degree Centrality

The journey to identify valuable links that achieve competitive parity begins with a thorough analysis of the top-ranking websites. Manually sifting through numerous backlink reports from Ahrefs can be a daunting task. Furthermore, delegating this task to a virtual assistant or team member may create a backlog of ongoing responsibilities.

Ahrefs lets users enter up to 10 competitors in its link intersect tool, which I believe is one of the best link intelligence tools available today. If you are comfortable with its complexity, it can simplify much of this analysis.

As mentioned earlier, our focus is on reaching beyond the standard list of links that other SEOs target to achieve parity with the top-ranking websites. This gives us a strategic advantage during the initial planning stages as we set out to influence the SERPs.

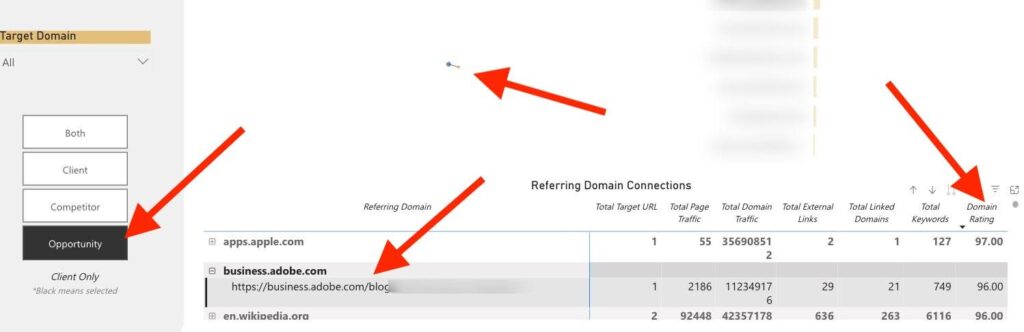

Thus, we implement various filters within our SERP Ecosystem to identify “opportunities,” defined as links that our competitors possess but we do not.

This process allows us to efficiently identify orphaned nodes within the network graph: sites that link to our competitors but not to us. Sorting the data table by Domain Rating (DR) then surfaces powerful links to add to our outreach workbook. I’m not particularly fond of third-party metrics, but they are useful for quickly identifying valuable links.
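The filter described above can be sketched in a few lines: compute each referring domain's degree centrality (here, the fraction of competitors it links to), drop the domains that already link to us, and sort by centrality, then DR. The input shapes, domain names, and DR values are hypothetical placeholders for whatever your backlink export provides.

```python
def link_opportunities(competitor_profiles, own_referrers, domain_rating):
    """Rank referring domains that link to competitors but not to us.

    competitor_profiles: competitor -> set of referring domains.
    own_referrers: referring domains already linking to our site.
    domain_rating: referring domain -> third-party DR value (assumed input).
    Returns (domain, degree_centrality, dr) tuples, best first.
    """
    n = len(competitor_profiles)
    degree = {}
    for referrers in competitor_profiles.values():
        for domain in set(referrers):
            degree[domain] = degree.get(domain, 0) + 1
    # "Opportunities": links competitors have but we do not.
    gaps = [d for d in degree if d not in own_referrers]
    return sorted(
        ((d, degree[d] / n, domain_rating.get(d, 0)) for d in gaps),
        key=lambda row: (-row[1], -row[2], row[0]),
    )


profiles = {
    "competitor-a.example": {"x.example", "y.example", "z.example"},
    "competitor-b.example": {"x.example", "z.example"},
    "competitor-c.example": {"z.example", "w.example"},
}
dr = {"x.example": 80, "y.example": 40, "z.example": 65, "w.example": 70}
for domain, centrality, rating in link_opportunities(profiles, {"x.example"}, dr):
    print(domain, round(centrality, 2), rating)
```

Sorting on centrality first and DR second is one design choice; swapping the order favors raw authority over how common the link is in the SERP.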

3. Efficiently Organize and Control Your Data Pipelines

This strategy facilitates the seamless addition of new competitors and their integration into our network graphs. Once your SERP ecosystem is established, expanding it becomes a straightforward process. You can also eliminate unwanted spam links, blend data from various related queries, and manage a comprehensive database of backlinks.
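The pipeline operations listed above (adding competitors, stripping spam, blending related queries) can be sketched as a small in-memory store. The `SerpEcosystem` class is a hypothetical illustration, not a specific tool; a production setup would likely sit on a database.

```python
from collections import defaultdict


def _norm(domain):
    """Normalize a domain string for consistent comparisons."""
    return domain.strip().lower()


class SerpEcosystem:
    """Hypothetical store: query -> competitor -> set of referring domains."""

    def __init__(self, spam_blocklist=()):
        self.data = defaultdict(dict)
        self.spam = {_norm(d) for d in spam_blocklist}

    def add_competitor(self, query, competitor, referring_domains):
        """Add (or extend) a competitor's profile, skipping blocklisted domains."""
        clean = {_norm(d) for d in referring_domains} - self.spam
        self.data[query].setdefault(competitor, set()).update(clean)

    def block(self, domain):
        """Blocklist a spam domain and purge it from every stored profile."""
        domain = _norm(domain)
        self.spam.add(domain)
        for competitors in self.data.values():
            for referrers in competitors.values():
                referrers.discard(domain)

    def blended(self, queries):
        """Union each competitor's profile across several related queries."""
        merged = defaultdict(set)
        for q in queries:
            for competitor, referrers in self.data.get(q, {}).items():
                merged[competitor] |= referrers
        return dict(merged)


eco = SerpEcosystem(spam_blocklist=["spam.example"])
eco.add_competitor("query one", "a.example", ["X.example", "spam.example"])
eco.add_competitor("query two", "a.example", ["y.example"])
eco.add_competitor("query two", "b.example", ["x.example"])
print(eco.blended(["query one", "query two"]))
```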

Effectively organizing and filtering your data is the first step toward generating scalable outputs. This level of detail can unveil countless new opportunities that may have otherwise gone unnoticed.

Transforming data and creating internal automations while introducing additional analytical layers can foster the development of innovative concepts and strategies. Personalize this process, and you will uncover numerous use cases for such a setup, far beyond what can be covered in this article.

4. Uncover Mini Authority Websites with Eigenvector Centrality

In graph theory, eigenvector centrality holds that a node (here, a website) is important to the extent that it connects to other important nodes. The more important its neighboring nodes, the higher the node’s own score.

This may not be beginner-friendly, but once the data is organized within your system, scripting to uncover these valuable links becomes a straightforward task, and even AI can assist you in this process.
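As an example of such a script, here is a minimal power-iteration sketch of eigenvector centrality for an undirected graph given as edge pairs (for instance, referring domain to competitor). It iterates with (A + I) rather than A, the same shift NetworkX's `eigenvector_centrality` uses, so it does not oscillate on bipartite graphs. The edge list is hypothetical; in practice you might simply call NetworkX.

```python
def eigenvector_centrality(edges, max_iter=1000, tol=1e-12):
    """Power iteration on (A + I) for an undirected graph; scores have unit L2 norm."""
    neighbors = {}
    for u, v in edges:
        neighbors.setdefault(u, set()).add(v)
        neighbors.setdefault(v, set()).add(u)
    score = {n: 1.0 for n in neighbors}
    for _ in range(max_iter):
        # Each node keeps its own score (the +I term) plus its neighbors' scores.
        new = {n: score[n] + sum(score[m] for m in neighbors[n]) for n in neighbors}
        norm = sum(x * x for x in new.values()) ** 0.5
        new = {n: x / norm for n, x in new.items()}
        if sum(abs(new[n] - score[n]) for n in neighbors) < tol:
            return new
        score = new
    return score


edges = [
    ("hub.example", "a.example"),
    ("hub.example", "b.example"),
    ("hub.example", "c.example"),
    ("a.example", "b.example"),
]
scores = eigenvector_centrality(edges)
for site, value in sorted(scores.items(), key=lambda kv: -kv[1]):
    print(site, round(value, 3))
```

`a.example` and `c.example` both link to the hub, but `a.example` also connects to `b.example`, another well-connected node, so it scores higher. That is the "mini authority" effect this step looks for.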

5. Backlink Analysis: Utilizing Disproportionate Competitor Link Distributions

Although this concept may not be novel, analyzing 50-100 websites in the SERP and identifying the pages that attract the most links is an effective method for extracting valuable insights.

We could focus solely on the “top linked pages” on a site, but this approach often yields little useful information, particularly for well-optimized websites. Typically, the top linked pages are just the homepage and the primary service or location pages.

A better strategy is to concentrate on pages with a disproportionate number of links. Doing this programmatically means filtering these opportunities with applied mathematics; the specific methodology is up to you. This can be challenging because the threshold for an outlier depends heavily on overall link volume. For example, a 20% concentration of links on a site with only 100 links is a drastically different scenario from the same concentration on a site with 10 million links.

For instance, if a single page attracts 2 million links while hundreds or thousands of other pages collectively gather the remaining 8 million, it indicates that we should reverse-engineer that particular page. Was it a viral sensation? Does it provide a valuable tool or resource? There must be a compelling reason behind the influx of links.
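Since the methodology is left open, here is one possible filter, sketched under our own assumptions: flag a page when its share of the site's backlinks exceeds `min_share` and its absolute count exceeds `min_links`. The absolute floor is one crude way to handle the small-site versus large-site problem described above. Both thresholds are illustrative, not recommended values, and the homepage is skipped because it attracts links regardless.

```python
def disproportionate_pages(page_links, min_share=0.15, min_links=50, skip=("/",)):
    """Flag pages holding an outsized share of one site's backlinks.

    page_links maps URL paths to backlink counts for a single site.
    Returns (path, links, share) tuples, largest first.
    """
    total = sum(page_links.values())
    if total == 0:
        return []
    flagged = [
        (path, count, count / total)
        for path, count in page_links.items()
        if path not in skip and count >= min_links and count / total >= min_share
    ]
    return sorted(flagged, key=lambda row: -row[1])


# The scenario above: one page with 2 million links, 1,000 pages sharing 8 million.
big_site = {f"/page-{i}": 8_000 for i in range(1_000)}
big_site["/free-tool"] = 2_000_000
print(disproportionate_pages(big_site))  # [('/free-tool', 2000000, 0.2)]

# A small site where 20% of links is only 20 links: not flagged.
small_site = {"/": 60, "/about": 20, "/contact": 20}
print(disproportionate_pages(small_site))  # []
```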

Backlink Analysis: Understanding Unflagged Scores

With this actionable data, you can start investigating why certain competitors are acquiring unusual amounts of backlinks to specific pages on their site. Use this understanding to inspire the creation of content, resources, and tools that users are likely to link to.

Data like this has broad utility, which justifies investing time in a process for analyzing larger datasets of link data. The opportunities it can surface are extensive.

Backlink Analysis: A Comprehensive Step-by-Step Guide for Crafting an Effective Link Plan

The first step in this process is sourcing reliable backlink data. We highly recommend Ahrefs for its consistently superior data quality compared to its competitors. If feasible, however, combining data from multiple tools can significantly improve your analysis.

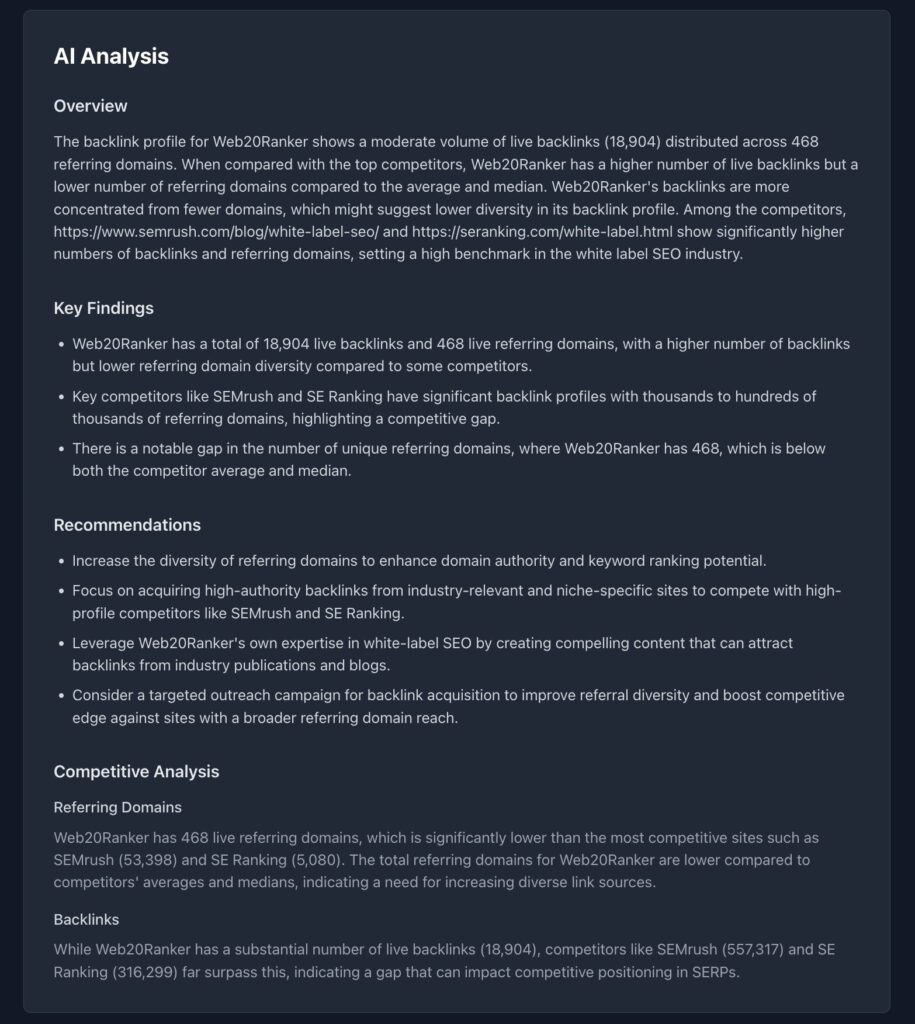

Our link gap tool serves as an excellent solution. Simply input your site, and you’ll receive all the essential information:

- Visualizations of link metrics

- URL-level distribution analysis (both live and total)

- Domain-level distribution analysis (both live and total)

- AI-driven analysis for deeper insights

Map out the exact links you’re missing—this focus will help you close the gap and strengthen your backlink profile with minimal guesswork. Our link gap report provides more than just graphical data; it also includes an AI analysis, offering an overview, key findings, competitive analysis, and link recommendations.

Each platform commonly surfaces unique links that the others miss. Before combining tools, however, weigh the cost against your ability to merge the data into a cohesive format.
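Merging exports into a cohesive format mostly comes down to normalizing URLs to a common key. A minimal sketch, assuming each export has already been read into a list of row dicts (for example with `csv.DictReader`); the tool names and column names below are hypothetical, since every tool labels its columns differently.

```python
from urllib.parse import urlsplit


def normalize_domain(value):
    """Reduce a URL or bare domain to a lowercase host without a leading 'www.'."""
    value = value.strip().lower()
    host = urlsplit(value if "//" in value else "//" + value).hostname or ""
    return host[4:] if host.startswith("www.") else host


def merge_exports(*exports):
    """Merge several backlink exports into {referring_domain: set_of_tools}.

    Each export is a (tool_name, rows, url_column) tuple.
    """
    merged = {}
    for tool, rows, url_column in exports:
        for row in rows:
            domain = normalize_domain(row[url_column])
            if domain:
                merged.setdefault(domain, set()).add(tool)
    return merged


tool_a_rows = [
    {"source_url": "https://www.news.example/story"},
    {"source_url": "https://blog.example/post"},
]
tool_b_rows = [{"url": "http://news.example/other"}, {"url": "forum.example"}]
merged = merge_exports(("tool_a", tool_a_rows, "source_url"), ("tool_b", tool_b_rows, "url"))
print(merged)
```

Keeping the set of tools per domain also shows which links only one platform found, which helps when weighing whether a second subscription is worth its cost.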

Next, you will require a data visualization tool. There’s no shortage of options available to help you achieve your objectives. Here are a few resources to assist you in selecting the right one:

The Article Backlink Analysis: A Data-Driven Strategy for Effective Link Plans Was Found On https://limitsofstrategy.com
